VVS1 and VVS2 are two of the highest grades on the diamond clarity scale. Both stand for "Very, Very Slightly Included" and describe diamonds whose inclusions are minute. This guide covers the similarities, differences, importance, and cost factors associated with VVS1 and VVS2 diamonds so that you can buy with confidence from reputable online sellers.

What is Diamond Clarity?

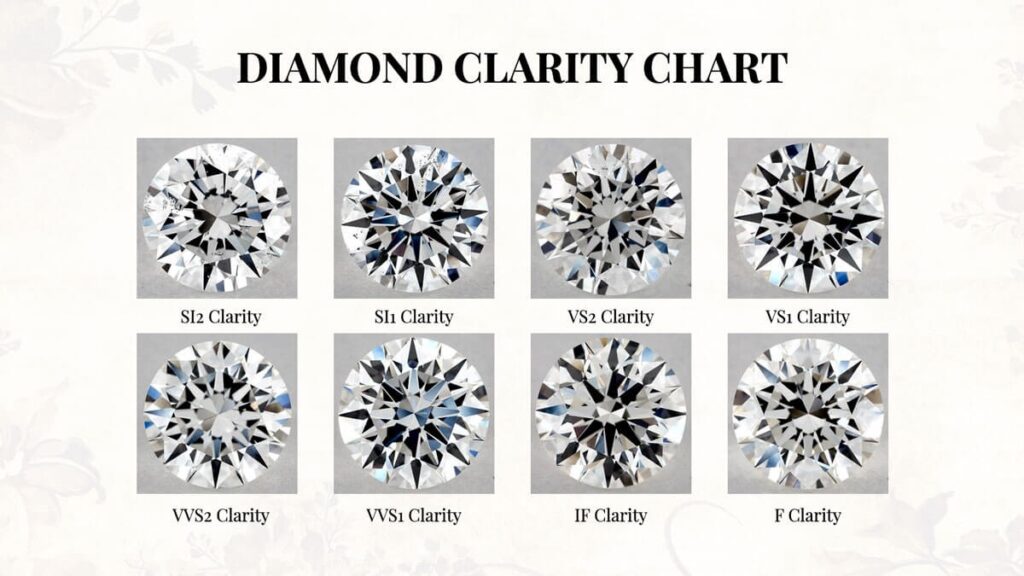

Diamond clarity refers to the presence of internal characteristics, known as inclusions, and surface characteristics, known as blemishes. The Gemological Institute of America (GIA) uses a clarity grading scale, running from Flawless (FL) down to Included (I3), to assess and communicate the level of these characteristics as seen under 10x magnification. The higher the clarity grade, the fewer and less visible the inclusions or blemishes. VVS1 and VVS2 sit near the top of the scale, directly below Flawless and Internally Flawless (IF).

Similarities between VVS1 and VVS2 Clarity

Both VVS1 and VVS2 diamonds offer exceptional clarity and visual appeal. They share several similarities, including:

Inclusion Visibility: Both VVS1 and VVS2 diamonds have inclusions so slight that even a skilled grader has difficulty seeing them under 10x magnification. They are invisible to the naked eye and do not affect the overall beauty and brilliance of the diamond.

Brilliance: VVS1 and VVS2 diamonds can exhibit remarkable brilliance and sparkle. Their inclusions are too small to obstruct light in any noticeable way, so clarity does not hold back light performance in either grade; how well the stone is cut matters far more.

Differences between VVS1 and VVS2 Clarity

While VVS1 and VVS2 are closely related in terms of clarity, there are subtle differences that set them apart:

Inclusion Location and Type: As a rule of thumb, the inclusions in a VVS1 diamond sit toward the edges or the pavilion (bottom) of the stone and are often hard to find from the top view, even under 10x magnification. VVS2 diamonds may have inclusions that are slightly easier to find under magnification, potentially located closer to the center or table (top) of the diamond.

Size and Quantity of Inclusions: VVS1 diamonds generally have smaller and fewer inclusions than VVS2 diamonds. VVS2 diamonds may contain slightly larger or more numerous inclusions, although these are still minute and invisible to the naked eye.

Importance of VVS1 and VVS2 to the Buyer

Choosing between VVS1 and VVS2 diamonds depends on personal preferences and budget considerations. Both grades offer exceptional clarity and beauty. Keep in mind that the differences between them are microscopic and cannot be seen with the naked eye.

Selecting either grade ensures that you are acquiring a diamond of outstanding clarity, with inclusions too small to interfere with light reflection or brilliance. Whether you opt for VVS1 or VVS2, you can be confident in owning a diamond that is of high quality and visually stunning.

Cost Factors

The cost of a diamond is influenced by various factors, including carat weight, cut quality, color grade, and clarity grade. In general, VVS1 diamonds are priced slightly higher than VVS2 diamonds due to their superior clarity. The extremely minimal and hard-to-detect inclusions in VVS1 diamonds make them rarer and more valuable. However, it’s worth noting that pricing can also vary based on other factors such as diamond shape, fluorescence, and market demand.

Expert Grading and Certification

When purchasing a VVS1 or VVS2 diamond, it is crucial to ensure that the diamond has been graded by a reputable gemological laboratory, such as the GIA or AGS. These independent institutions provide objective and reliable assessments of a diamond’s clarity and other quality characteristics. The grading report they issue, often called a certificate, documents the diamond’s identity and its measured quality characteristics, so you can confirm that the stone matches the seller’s description.

Trustworthy Online Diamond Sellers

It is just as important to choose a reputable online diamond seller. Look for sellers who provide detailed information about the diamonds they offer, including grading reports from the GIA or another reputable laboratory. Reputable sellers should have positive customer reviews, transparent pricing, and a wide selection of VVS1 and VVS2 diamonds to choose from.

Importance of Clarity in VVS1 and VVS2 Diamonds

The clarity of a diamond plays a significant role in its overall beauty and value. VVS1 and VVS2 diamonds are highly sought after due to their exceptional clarity grades. Their inclusions and blemishes are so minimal that the diamonds are eye-clean, meaning that no imperfections are visible without magnification.

At this level of clarity, light passes through the stone with no meaningful obstruction from inclusions, so a well-cut VVS1 or VVS2 diamond can display its full sparkle and fire. Whichever grade you choose, you can be confident that clarity will add to the diamond’s allure.

Considerations for Choosing Between VVS1 and VVS2 Diamonds

When deciding between a VVS1 and VVS2 diamond, there are a few factors to consider:

Personal Preference: Your personal taste and aesthetic preference play a crucial role in determining which clarity grade is more appealing to you. Some individuals may prefer the slightly higher clarity of a VVS1 diamond, while others may find the minor differences in clarity between VVS1 and VVS2 negligible.

Budget: The price of a diamond is influenced by various factors, including its clarity grade. VVS1 diamonds tend to be slightly more expensive than VVS2 diamonds because of their higher clarity grade. Consider your budget and determine which grade fits it while still providing the desired level of clarity.

Size and Setting: The size of the diamond and the chosen setting can affect the visibility of inclusions. Inclusions are generally easier to spot in larger diamonds, whereas smaller diamonds hide them better, although at the VVS level neither is visible without magnification. Additionally, certain settings, such as halo or pavé, can camouflage slight differences in clarity between VVS1 and VVS2 diamonds.

Certification: To ensure the authenticity and quality of your VVS1 or VVS2 diamond, always look for diamonds accompanied by a reputable diamond grading certificate, such as those issued by the GIA. This certification provides an unbiased evaluation of the diamond’s clarity and other quality characteristics.

Conclusion

Choosing between a VVS1 and VVS2 diamond requires careful consideration of personal preference and budget. Both clarity grades offer exceptional quality and beauty, with minimal inclusions that leave brilliance unaffected. By understanding the similarities and differences between VVS1 and VVS2 diamonds and working with reputable online sellers, you can confidently select a diamond that meets your desired clarity standards.

Remember, the choice between VVS1 and VVS2 diamonds ultimately comes down to personal preference and the level of clarity that resonates with you. With their exceptional clarity grades, VVS1 and VVS2 diamonds are sure to captivate and delight, serving as a symbol of timeless beauty and elegance.